On the importance of studying the robustness and safety properties of machine learning models

Innovative research allows us to create AI solutions that exceed expectations, providing our clients with a competitive advantage and the security of their business

Research into the robustness, safety, and interpretability of machine learning models is becoming increasingly important as these technologies are deployed more widely in everyday life. The number of scientific articles on this topic exceeded 10,000 in 2024. Interest used to come mainly from researchers, but neural network security has now become a mainstream concern in industry. Let's consider why this is critical and where these aspects need particular attention.

Robustness of machine learning models

In machine learning, robustness is a model's ability to maintain stable performance under three conditions: noise and small changes in the input data, data that differs from the data the model was trained on, and data whose distribution changes over time.

Among the properties that a quality machine learning model should have in terms of robustness are:

Resistance to noise and small deviations:

The model should function correctly even in the presence of random errors or inaccuracies in the data.

This matters for real-world data, which often contains measurement errors or input mistakes.

Generalization ability:

The model should work effectively on new, previously unseen data.

This is critical when models are applied in dynamic environments.

Stability in the face of data distribution shift:

The model should adapt to gradual changes in the data distribution over time.

This is a key factor for the long-term use of models in real conditions.
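The first property, resistance to noise, can be checked empirically by measuring how accuracy changes as noise is added to the inputs. The following is a minimal sketch, not a standard benchmark: `toy_predict`, the synthetic points, and the chosen noise levels are all hypothetical stand-ins for a real model and dataset.

```python
import random


def accuracy(predict, inputs, labels):
    """Fraction of inputs for which predict(x) matches the label."""
    correct = sum(1 for x, y in zip(inputs, labels) if predict(x) == y)
    return correct / len(inputs)


def add_gaussian_noise(inputs, sigma, rng):
    """Return a copy of inputs with zero-mean Gaussian noise on every feature."""
    return [[v + rng.gauss(0.0, sigma) for v in x] for x in inputs]


def noise_sweep(predict, inputs, labels, sigmas, seed=0):
    """Accuracy of predict at each noise level (standard deviation) in sigmas."""
    rng = random.Random(seed)
    return {
        s: accuracy(predict, add_gaussian_noise(inputs, s, rng), labels)
        for s in sigmas
    }


# Hypothetical stand-in model: classify a 2-D point by the sign of x0 + x1.
def toy_predict(x):
    return 1 if x[0] + x[1] > 0 else 0


# Synthetic data labeled by the model itself, so clean accuracy is 1.0
# and any drop is caused purely by the injected noise.
data_rng = random.Random(42)
points = [[data_rng.uniform(-1, 1), data_rng.uniform(-1, 1)] for _ in range(500)]
labels = [toy_predict(p) for p in points]

results = noise_sweep(toy_predict, points, labels, sigmas=[0.0, 0.1, 0.5])
for sigma, acc in results.items():
    print(f"sigma={sigma}: accuracy={acc:.3f}")
```

A steep drop in accuracy at small noise levels suggests the model is fragile near its decision boundary. The same sweep can be repeated with noise that matches the measurement errors expected in production.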

Safety of machine learning models

The safety of machine learning models is their ability to resist malicious attacks, manipulation, and misuse, while preserving data confidentiality and supporting ethical use.

A safe and reliable AI system should address the following aspects:

Protection against attacks:

Resisting attempts to deceive or manipulate the model.

Maintaining stable behavior under targeted attacks.

Interpretability:

The ability to explain the model's outputs in terms that humans can understand.

This plays a major role in areas where important decisions are made on the basis of neural network outputs.

Data confidentiality:

Protecting personal information used for training and inference.

Preventing leaks of confidential data through the model.

Ethics and fairness:

Ensuring the impartiality of the model.

Preventing discrimination and unfair decisions.
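To make "attempts to deceive the model" concrete, here is a minimal sketch of one well-known attack, the fast gradient sign method (FGSM), applied to a logistic regression classifier. The weights, the input, and `epsilon` are hypothetical values chosen for illustration. Real attacks target far larger models, but the mechanism is the same: nudge every input feature slightly in the direction that increases the loss.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(sum(w * v for w, v in zip(weights, x)) + bias)


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def fgsm_perturb(weights, bias, x, y, epsilon):
    """Shift each feature by +/- epsilon in the direction that increases the loss.

    For cross-entropy loss on a logistic model, the gradient of the loss
    with respect to feature i is (p - y) * w_i.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - y) * w for w in weights]
    return [v + epsilon * sign(g) for v, g in zip(x, grad)]


# Hypothetical model and input; the true label is 1.
weights, bias = [2.0, -1.0], 0.0
x, y = [0.3, 0.2], 1

x_adv = fgsm_perturb(weights, bias, x, y, epsilon=0.3)

print("clean input:      ", x, "p(class 1) =", round(predict_proba(weights, bias, x), 3))
print("adversarial input:", x_adv, "p(class 1) =", round(predict_proba(weights, bias, x_adv), 3))
```

In this toy case a bounded change of at most 0.3 per feature is enough to move the prediction across the 0.5 decision threshold, which is exactly the kind of instability that protection against attacks aims to prevent.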

Practical areas where robustness and safety are particularly important

Medicine

Artificial intelligence finds wide application in medicine, transforming many aspects of healthcare. Among its applications are:

Disease diagnosis: analysis of medical images (X-rays, MRI, CT scans, ultrasound) and assistance in diagnosis based on symptoms and medical history

Patient condition monitoring: real-time data analysis from wearable devices

Predictive analytics: predicting disease outbreaks, assessing risks of disease development in patients, predicting treatment outcomes, and more.

Robustness and safety deserve particular attention in medical AI for the following reasons:

Critical decisions: Medical decisions directly impact the health and lives of patients. Errors can lead to serious consequences, including incorrect treatment or lack of necessary treatment.

Data diversity: Medical data is often heterogeneous and can vary significantly between populations. Models must be resilient to variations in the data.

Confidentiality: Medical data is strictly confidential. Leakage or misuse of such data can have serious legal and ethical consequences.

Trust of the medical community: Doctors and patients must trust AI systems before those systems can be widely adopted. For this, the systems must be safe and their outputs interpretable.

Finance

The use of artificial intelligence (AI) in the financial sector is growing rapidly, transforming traditional approaches to financial management, investment, and risk. Let's consider the main areas of AI application in finance and the importance of security and robustness in this field.

Algorithmic trading: automated execution of trades based on market data analysis, including high-frequency trading. Vulnerabilities in these machine learning systems can lead to serious economic consequences for the firms that deploy them.

Creditworthiness evaluation: analysis of credit histories and clients' financial behavior. Such systems must be transparent and interpretable for regulators, and they must be unbiased. Because these models also process large volumes of confidential information, it is important to rule out data leaks.

Fraud detection: identifying suspicious transactions in real time and analyzing behavioral patterns to identify fraudsters. System robustness is critical for combating fraud effectively.
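The requirement that credit models be unbiased can be monitored with simple group-level metrics. The sketch below computes one of them, the demographic parity gap, which is the difference in approval rates between groups. The decisions and group labels are made-up illustrative data, and this single metric is only one of several fairness criteria. A small gap does not by itself prove that a model is fair.

```python
from collections import defaultdict


def approval_rates(decisions, groups):
    """Share of approved applications (decision == 1) within each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        approved[group] += decision
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Difference between the highest and lowest group approval rates."""
    rates = approval_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs: 1 = credit approved, 0 = credit denied.
decisions = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = approval_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)

# Group A is approved 3 times out of 4, group B once out of 4, so the gap is 0.5.
print(rates, gap)
```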

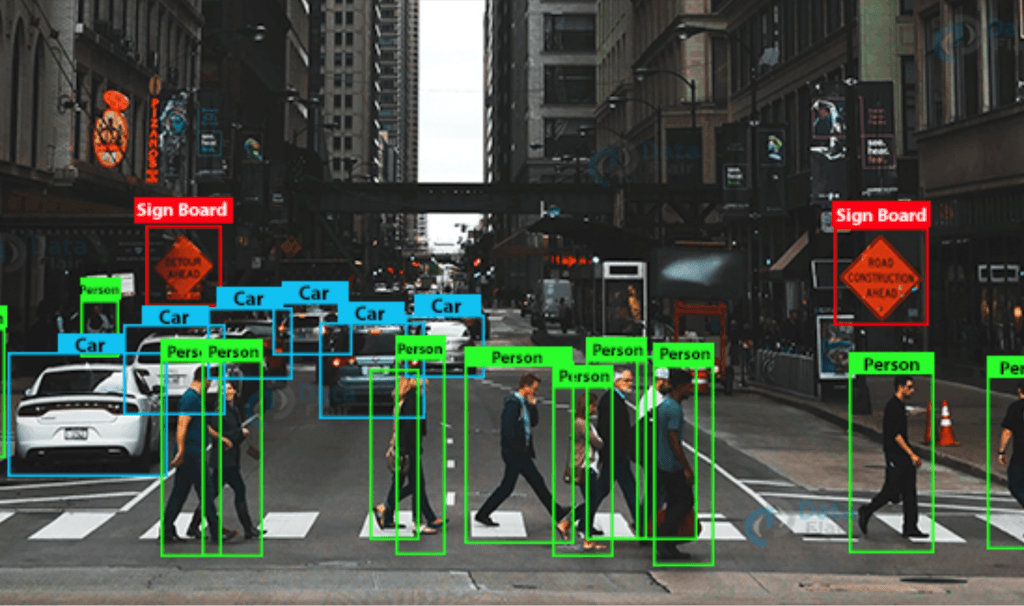

Autonomous vehicles

In the context of autonomous vehicles, robustness is vitally important, as these vehicles operate in dynamic and unpredictable conditions. They must react to sudden changes on the road: weather, pedestrians, other vehicles, road works, and so on. Unstable or incorrect AI operation can lead to accidents or other hazardous situations.

Examples of vulnerabilities:

Unexpected situations: vehicles may encounter situations that were not covered during AI training, such as erratic behavior by other drivers or complex road conditions.

Noise in data: errors in sensor readings (cameras, radars, lidars) or data interpretation can lead to incorrect AI decisions.

System attacks: deliberate manipulation, such as road signs altered to mislead the perception system, can deceive the AI and lead to unsafe decisions.

To increase the system's robustness, AI must be trained on a large amount of data, including rare and extreme scenarios, and have built-in mechanisms for stable behavior in the event of unexpected deviations.

The safety of autonomous vehicles depends on how effectively AI can prevent and minimize hazardous situations. Autonomous vehicles must operate in an environment where any incorrect decision can have fatal consequences for drivers, passengers, and pedestrians. This includes both collision prevention and the ability to make quick decisions in emergency situations.

Important safety aspects:

Ethical decisions: In the event of hazardous situations, AI must make decisions with minimal risk to human lives, which poses complex ethical questions for developers (for example, how to choose the least harmful outcome in an accident).

Algorithm reliability: Algorithms must be tested and verified for compliance with safety standards. Here, interpretability is extremely important.

Failure handling: In critical cases where AI cannot adequately assess the situation (for example, sensor failure or algorithm malfunction), the system must transition to a safe state or transfer control to the driver.
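The failure-handling rule above can be sketched as a small decision function. This is an illustrative simplification only: the `Status` fields, the `min_confidence` threshold of 0.8, and the three modes are assumptions made for the example and are not taken from any real vehicle platform or safety standard.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    AUTONOMOUS = "autonomous"
    HANDOVER = "handover_to_driver"
    SAFE_STOP = "safe_stop"


@dataclass
class Status:
    sensors_ok: bool        # False on a detected sensor failure
    confidence: float       # hypothetical self-reported confidence in [0, 1]
    driver_attentive: bool  # whether the driver is able to take over


def choose_mode(status, min_confidence=0.8):
    """Pick the operating mode, preferring the most conservative safe option."""
    if status.sensors_ok and status.confidence >= min_confidence:
        return Mode.AUTONOMOUS
    # The AI cannot adequately assess the situation: hand over if possible.
    if status.driver_attentive:
        return Mode.HANDOVER
    # Nobody can take over, so transition to a safe state.
    return Mode.SAFE_STOP


print(choose_mode(Status(sensors_ok=True, confidence=0.95, driver_attentive=True)))
print(choose_mode(Status(sensors_ok=False, confidence=0.95, driver_attentive=True)))
print(choose_mode(Status(sensors_ok=True, confidence=0.40, driver_attentive=False)))
```

Keeping this logic in a small, explicit function rather than inside the learned model makes it easier to test and verify independently, which fits the reliability requirement listed above.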

Industry

Artificial intelligence is swiftly changing the face of modern industry, opening new opportunities for production optimization, efficiency improvement, and innovative product creation. Let's consider the main applications of AI in industry and analyze the importance of considering security and robustness in this field.

Main areas of AI application in industry:

Predictive maintenance: predicting equipment breakdowns and reducing unplanned downtimes

Workplace safety: monitoring compliance with safety regulations, predicting and preventing accidents

Quality control: automatic detection of defects using computer vision, analysis of the reasons for defects, prediction of product quality.

The security of artificial intelligence systems in industry is important for the following reasons:

Negative economic consequences. Failures in AI systems can cause production downtime and financial losses, and can damage product quality and the company's reputation.

Potential cyber-attacks. Industrial AI systems may be targeted by hackers, and a breach of production control systems can have catastrophic consequences.

Need for reliability and fault tolerance. AI systems must be resilient to failures and errors, which can occur regularly due to unpredictable circumstances.

Aug 26, 2024